Artificial intelligence (AI) and machine learning (ML) systems such as ChatGPT are convenient and powerful tools that can improve productivity, efficiency and customer satisfaction. Convenience, though, comes with some caveats. We want you to understand the risks of feeding sensitive data into these systems as well as the reliability of the data that comes out.

The 2023 MAD (ML/AI/Data) Landscape

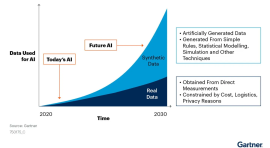

The AI landscape is evolving at a rapid -- even exponential -- pace, with frequent developments and new applications being released regularly. This is just a snapshot of apps available right now.

What’s going in?

What data is being shared with these tools, where does it go, and how is it being used?

Any data that is fed into an AI/ML system may be used to expand the training data set. Do not enter prompts containing intellectual property or protected information, such as FERPA-protected student records or personally identifiable information (PII), into any AI/ML system, including ChatGPT.

AI relies on vast amounts of data to train and improve algorithms, raising concerns that the information it ingests could be retrieved by anyone with the right prompt. This poses a significant risk and could lead to unintentional exposure of corporate information, private data or intellectual property.

What’s coming out?

What are the implications?

Be aware of the following pitfalls when using AI-generated content:

Intellectual property and copyright

Double-check the intellectual property status of any response you get from ChatGPT or any other AI/ML system. Terms of service, end-user license agreements and other click-through documentation may restrict the ways you can legally use the output.

Several intellectual property creators have sued AI companies, alleging inappropriate use of creator data to train the AI models. For example, Getty Images sued Stability AI, the company behind the Stable Diffusion image generation tool, for alleged license violations, claiming Stability AI used Getty's image set to train its model.

The U.S. Copyright Office is conducting a study regarding the copyright issues raised by generative artificial intelligence.

Downright false information

Sometimes AI systems just make things up, generating answers that sound plausible without any basis in actual truth. A New York lawyer got into some trouble after he used ChatGPT for legal research and ended up submitting a brief that cited fake cases invented by AI.

Bias

AI systems are trained on the data that is fed into them. These data sets might be inclusive, unbiased and fully representative of the questions asked. Or they might be badly biased, representing only a tiny subset of the relevant data, and produce heavily skewed results.

Be cautious about any demographic and personal information you input into AI systems, and approach any data that is output with a healthy dose of skepticism. Always run AI results through a filter of common sense and reality.

Takeaways

Limit Data Sharing

- Be mindful of the information you provide to AI systems.

- Never share sensitive, confidential or protected data.

- Get the approval of data owners before using non-public data in any AI tool.
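
As one illustration of limiting what you share, the sketch below scrubs a few obvious identifiers from a prompt before it is pasted into an AI tool. It is a minimal example under stated assumptions, not an approved control: the `redact` function and the three patterns (email address, U.S. Social Security number, 10-digit phone number) are hypothetical and will miss names, student IDs and most other protected data, so it does not replace the guidance above.

```python
import re

# Illustrative patterns only (assumptions for this sketch).
# Real PII detection needs far broader coverage than three regexes.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.]\d{3}[-.]\d{4}\b"),
}


def redact(text):
    """Replace each match with a [LABEL] placeholder and count the hits."""
    counts = {}
    for label, pattern in PATTERNS.items():
        text, n = pattern.subn(f"[{label}]", text)
        if n:
            counts[label] = n
    return text, counts


prompt = "Email jane.doe@example.edu or call 555-123-4567 about SSN 123-45-6789."
clean, found = redact(prompt)
print(clean)   # the scrubbed prompt
print(found)   # how many items of each kind were replaced
```

A non-empty `found` dictionary is a signal to stop and reconsider whether the prompt should be sent at all, rather than proof that the scrubbed text is safe.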

Read Privacy Policies

- Familiarize yourself with the terms and conditions of the AI systems you use.

- Ensure that they follow best practices in data protection and adhere to relevant regulations.

Verify Data Outputs

- Have a person double-check all information that is generated by AI/ML.

- Be cognizant of the unreliability and possible bias of output.

- Understand that AI can and will make up facts (also known as AI hallucinations).

Contact ITS for a Security Review

- Have ITS conduct a security review before sharing or using any University data with an AI tool.